Dropbox Developer Platform

As the Lead Product Designer on the CI Tools team, I designed a custom developer tooling platform that streamlined engineering workflows. The fragmented experience of third-party tools presented an opportunity to create a unified solution tailored to Dropbox’s needs. The outcome was a more functional, visually coherent, and scalable platform that reduced time on task, led to a 60% decrease in support tickets, and achieved a 90% user satisfaction score. This translated into measurable gains in engineering efficiency for the business.

Problem

Engineers raised a high volume of complaints through a dedicated Slack feedback channel, 1:1 manager check-ins, and discovery user interviews. From these we identified pain points that slowed down the engineering workflow and hurt business performance.

Objective

Improve the speed of development cycles and code integration by creating a unified platform that consolidates all dev tools. Make it flexible and scalable to accommodate future functionality.

Establish an intuitive, user-centered and consistent experience by enhancing information architecture, interactions and data display across tools and adhering to the Dropbox Design System visual language.

My role

Shaped product strategy by prioritizing features.

Conducted qualitative and quantitative user research and iterated on concepts and prototypes.

Validated solutions with engineering stakeholders and the design system team.

Delivered high-fidelity mockups and supported engineers through implementation.

Conducted pre-launch QA and classified defects.

Collaborators: Engineering leadership, Code Workflows engineering team

Understanding the user

Personas and workflow

I defined three main personas and a high-level developer workflow.

New Droplets

Engineers who started at Dropbox in the last few months.

AI / ML Devs

Engineers with a longer tenure at Dropbox who build with AI and ML.

API Devs

Engineers who design, build, and maintain APIs.

Current state

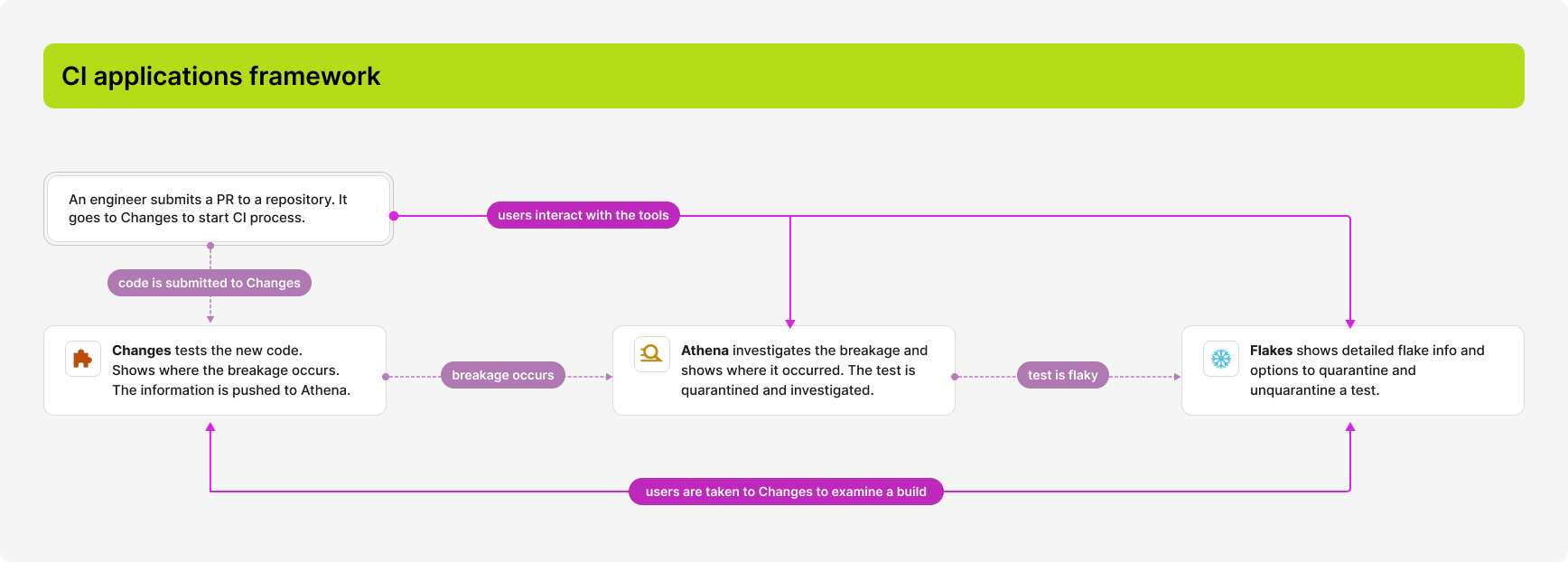

Engineers had established a basic framework and built three CI applications (Changes, Athena, and Flakes) with basic UIs. I started by identifying a high-level engineering workflow across those touchpoints.

User feedback

Users viewed the existing framework as a significant improvement but complained that the UX was poor. It took them a long time to find test information, and switching between the apps was cumbersome.

Flakes application design

Of all the applications, Flakes was the most critical in the engineering workflow, so it was prioritized as the first deliverable.

Challenge

Design an app that enables developers to discover flaky test information and act on it quickly and easily.

User tasks

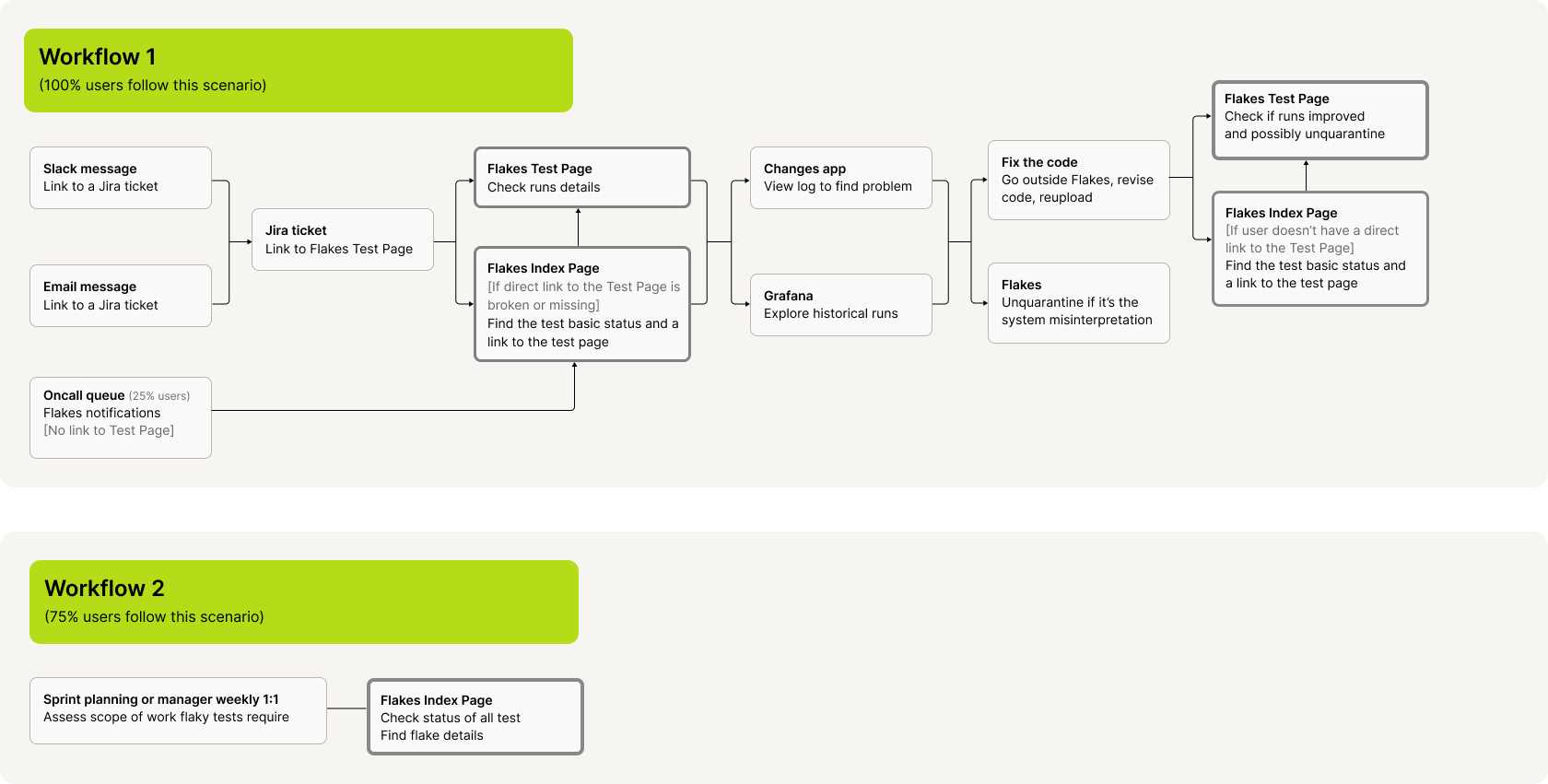

I started with discovery interviews with developers to map out a typical day in their life. Based on those interviews, I outlined the entry points and key actions Flakes should support. The main Index Page/Dashboard, with enhanced findability, and a Test Detail Page emerged as the core surfaces in the flow.

Requirements / Acceptance criteria

Users have a cross-application path to easily switch between tools in the workflow.

Users can quickly check test status and Jira tasks, find details of runs and build logs, and quarantine/unquarantine tests.

Dashboards with test data display are clear and actionable.

UX, information architecture, navigation and interactions are consistent, aligned with the Dropbox design system and scalable for future growth.

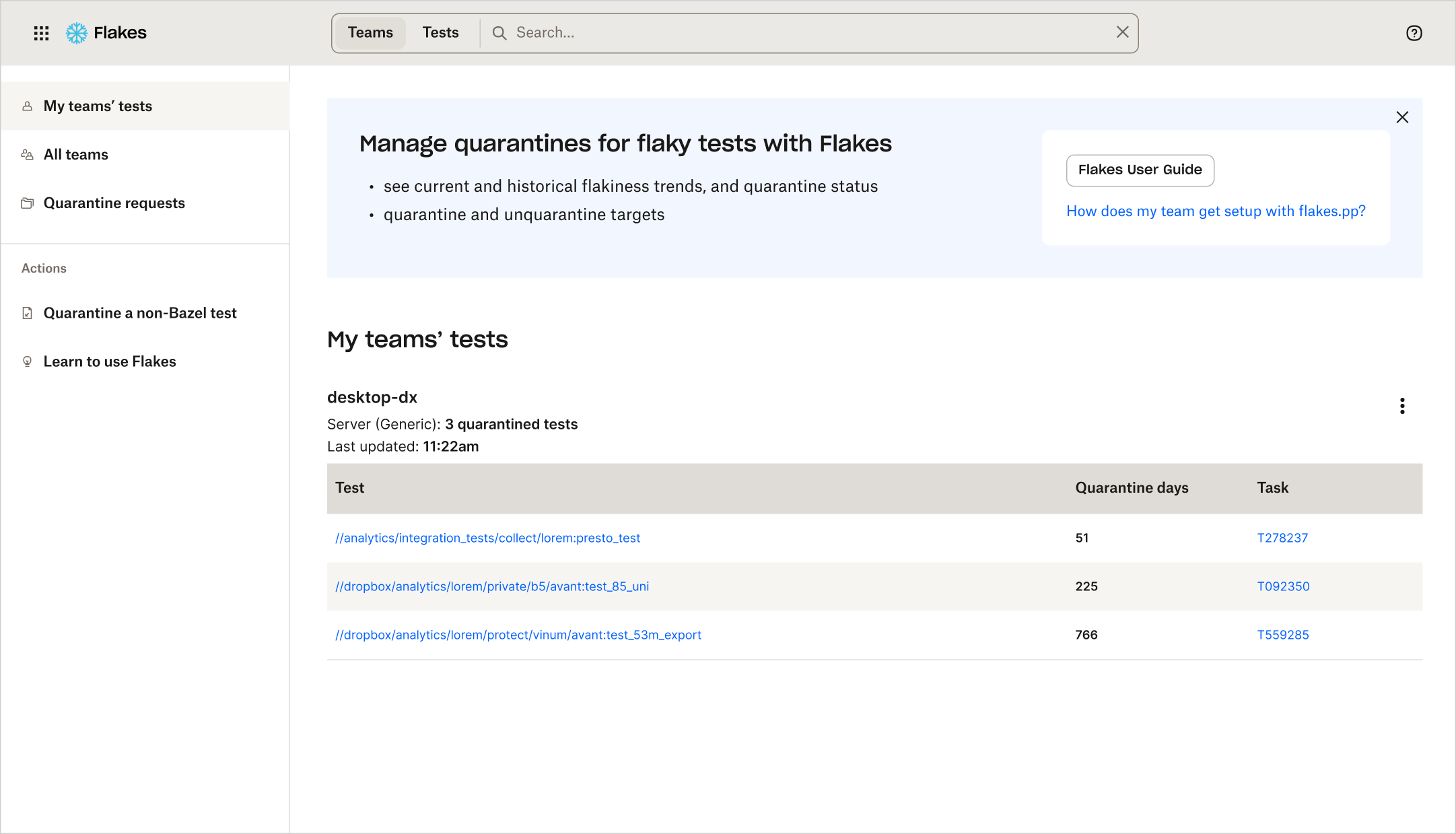

MAIN DASHBOARD

Concepting

The existing Index Page UI built by engineers provided basic functionality but left ample room for improvement.

I explored several solutions to help developers find their test information faster by personalizing content and improving the clarity of interactions and test dashboards.

Designed a global header pattern with a flexible layout and functionality that could be implemented across the platform, including an app switcher and unified search within a single search field.

Created a global Flakes left nav that divides links into informational and action items to increase searchability and scalability and to provide fast access to actions.

Replaced the Help text button with a standard icon-only button. Removed the ambiguous links from the page header, moved them to the left navigation actions section and provided clearer labeling.

Customized the main page content for first-time users by displaying a dismissible intro banner with links to additional learning resources.

Customized the main page for each user by providing dashboards listing their teams’ tests with the essential data they requested: a link to test details, quarantine days, and a link to a Jira ticket.

Aligned the design with existing Dropbox design system components and created new variants.

Engineering feedback

• We may not always be able to detect what team the user belongs to and show their team’s tests.

• Some users may want to see multiple teams’ tests.

Iterations

I addressed the additional requirements with tools that gave the user more control over displayed teams.

I added a team selection feature that allows users to choose and modify which teams they want to see on their home page.

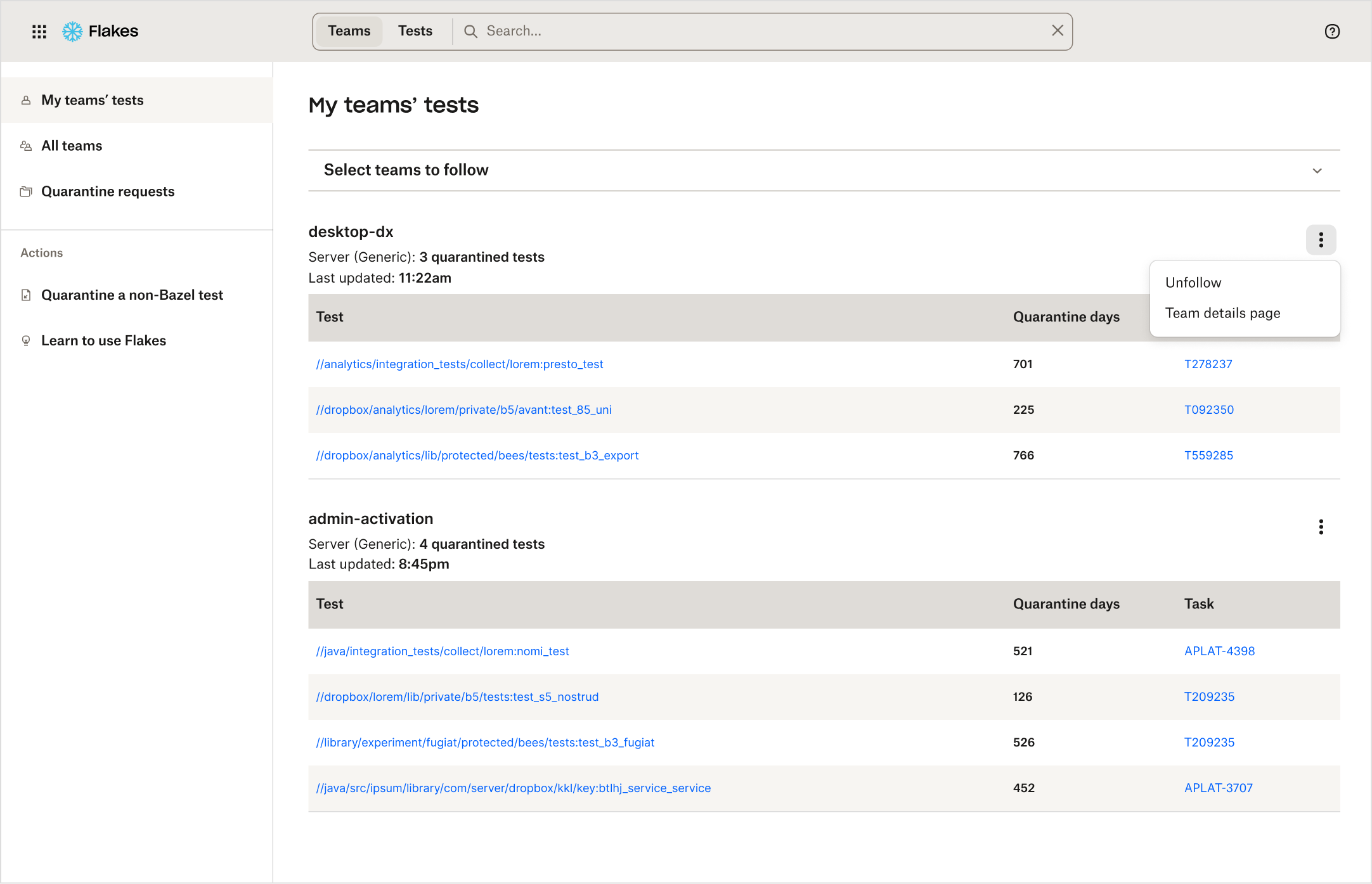

I introduced an option to unfollow a team in the “More” dropdown in the list header. If the list is reused on other pages where the team selection feature is not available, the dropdown still allows basic customization of the view.

I mocked up a multiple teams display option.

Scenario 1: We detected the user’s team(s)

We display data about their team(s), tests, and Jira references. We still provide the team selection feature so users can modify the selection. Because this action is secondary in this context, I minimized its prominence by showing only the “Select your team” CTA and revealing the select field on demand (on click of the down caret). To support contextual and scalable team management, I introduced an “Unfollow” action within the list-level menu.

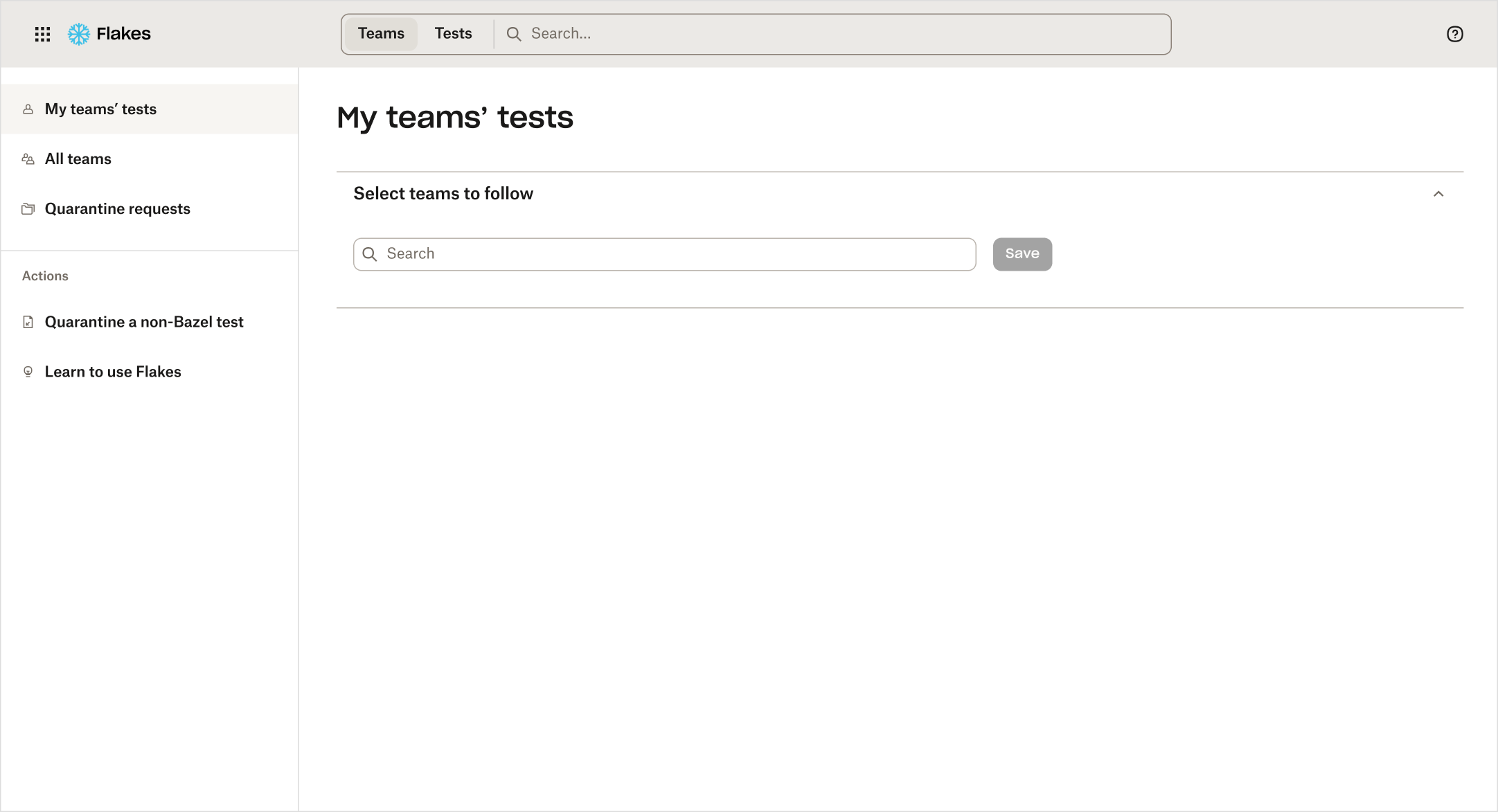

Scenario 2: We didn’t detect the user’s team(s)

We can’t display their team(s). The team selection feature is more prominent and unfolded by default as it becomes the primary action on the page.
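The two scenarios above can be summarized as a small piece of view logic. The Python sketch below is illustrative only: the names `HomeViewState` and `home_view_state` are hypothetical, and it is not the actual Flakes implementation. It assumes team detection returns a (possibly empty) list of team names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HomeViewState:
    """Hypothetical description of what the home page shows."""
    team_lists: List[str]        # teams whose test lists are displayed
    selector_expanded: bool      # is the team select field unfolded by default?
    selector_is_primary: bool    # is team selection the primary action on the page?


def home_view_state(detected_teams: List[str]) -> HomeViewState:
    """Map the result of team detection to the home page layout."""
    if detected_teams:
        # Scenario 1: teams detected. Show their lists and keep the
        # selector collapsed behind the CTA, since it is a secondary action.
        return HomeViewState(
            team_lists=list(detected_teams),
            selector_expanded=False,
            selector_is_primary=False,
        )
    # Scenario 2: no teams detected. Nothing to list, so the selector
    # is unfolded and becomes the primary action.
    return HomeViewState(
        team_lists=[],
        selector_expanded=True,
        selector_is_primary=True,
    )
```

Keeping the decision in one function makes the two layouts easy to review with engineers and to extend if a third state appears later.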

TEST DETAIL PAGE

Requirements

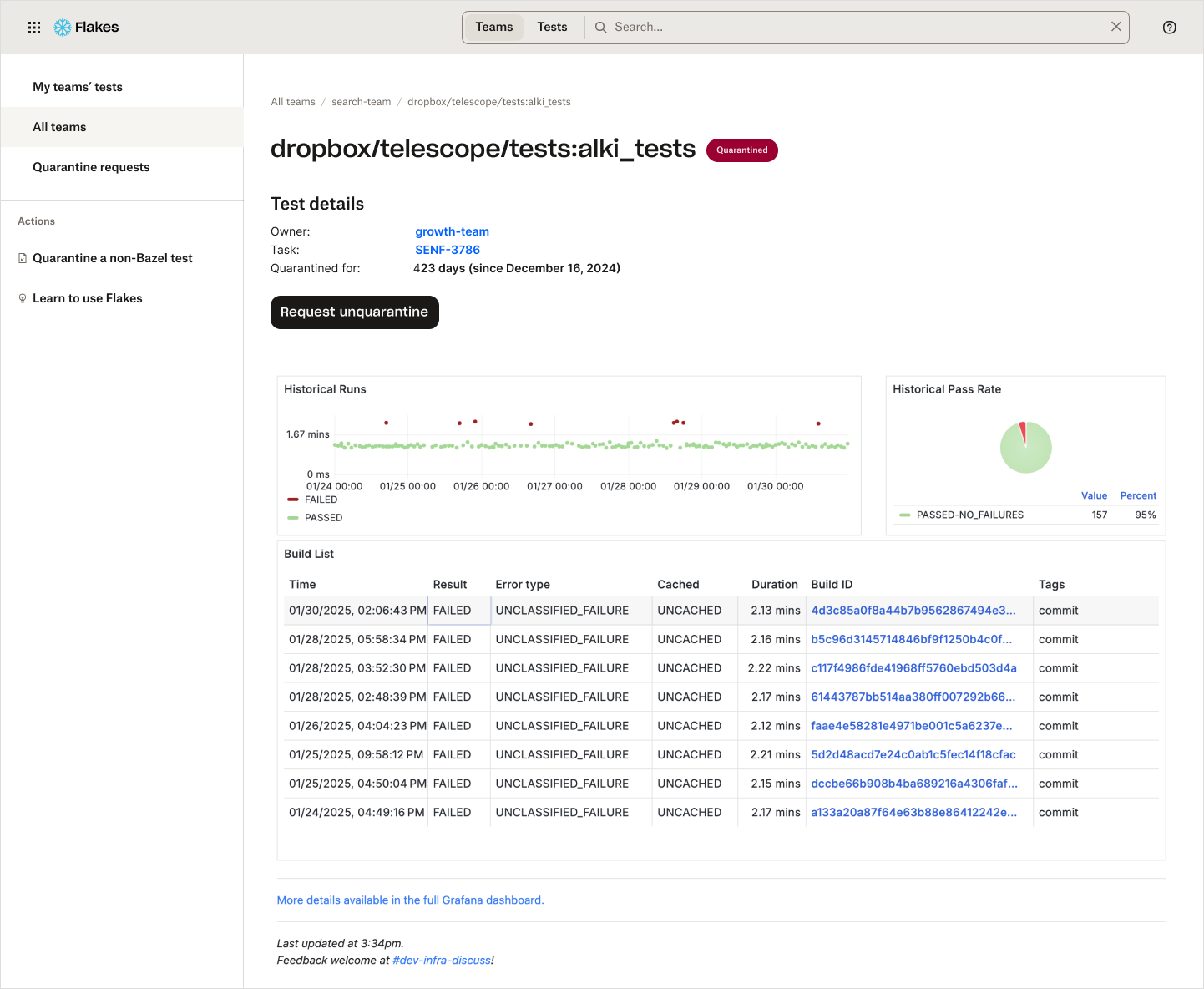

As a central workflow point, the test detail page had to show essential test information and action paths, which we defined through discovery interviews with engineers.

Display identifiers: a test URL that can be copied, owner, Jira task, and quarantine status.

Provide access to check runs, pass rate and build logs.

Allow users to quarantine or unquarantine a test.

Technical constraints

Data about the runs cannot be pulled into Flakes. We can only link to it.

Solution

Through iterations guided by user validation and feedback, I arrived at the best solution available under the technical constraints.

I grouped the identifiers and the quarantine action in one place in the high-visibility top area of the page. I emphasized the test status with a pill placed next to the test name.

I placed the Quarantine/Unquarantine status change button close to the status indicator pill in the high-visibility section.

For exploring historical runs and pass rates, we were able to pull basic data from third-party apps and display it in clickable iframes. We presented build data in a dashboard with deep links to build logs in Changes. Given the technical constraints, we adopted this as the MVP and planned to revisit it through post-launch user research and possible further refinement.

Post-launch user research

Goals

We wanted to learn whether users could successfully accomplish essential tasks, discover the key drivers of friction, and gather feedback on how useful the current features were, along with ideas for new ones. Insights from this research would inform the product strategy, in addition to the user feedback we were already responding to via Slack.

Methodology

I guided 8 users through 15-minute sessions. I began with questions about how and when they use the product, followed by scripted tasks. I observed how they interacted with the tool and where they got stuck. Each session led to an evaluation of the app and a discussion of future ideas.

Metrics

Value for the user, task completion rate, time on task / error rate / efficiency, and satisfaction score.

Findings

Engineers value and depend on Flakes. 100% had used it over the last month, and 75% use it on a weekly basis.

The My team’s tests list and the “follow a team” feature were highly appreciated. 75% said they would use them at sprint planning, the team weekly, or a manager 1:1.

100% completed the tasks, but 75% found them confusing at some point. The main source of friction was the Test Detail Page. Users found it hard to explore historical runs and pass rates pulled from Grafana. Build info is not surfaced in Flakes, and it takes a long time to browse through logs.

50% of users rated the experience at 5, and the other 50% rated it at 4, on a 0-5 scale.

The main reason for the lower score was data access on the Test Detail Page.

Users particularly liked:

The My team’s tests list and the “follow a team” feature

Modern, clean look

Findability, overall ease of use

Results and next steps

User testing showed a 90% satisfaction score. In the post-launch weeks the volume of complaints and defect reports collected via Slack and the in-app feedback tool dropped by 60%.
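One way to arrive at the 90% figure from the reported ratings is to express the mean rating as a share of the scale maximum. That formula is an assumption, since the case study does not state how the score was computed. The inputs below come from the findings: 8 participants, half rating the experience 5 and half rating it 4 on a 0-5 scale. The function name `satisfaction_percent` is hypothetical.

```python
from typing import Sequence


def satisfaction_percent(ratings: Sequence[int], scale_max: int = 5) -> float:
    """Mean rating as a percentage of the scale maximum (assumed formula)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    return 100 * sum(ratings) / (len(ratings) * scale_max)


# 8 participants: four rated 5, four rated 4.
ratings = [5, 5, 5, 5, 4, 4, 4, 4]
print(satisfaction_percent(ratings))  # 90.0
```

With a sample of 8, each participant moves the score by 2.5 points per rating step, so the figure is best read as directional.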

The research confirmed that the primary issue impacting task performance was the difficulty of accessing information on the Test Detail Page. Drawing from engineers’ comments, I recommended including these improvements in the product roadmap:

Builds: Pull a summary from the log and surface it in Flakes, or provide a deep link that takes the user directly to the failure in the log.

Historical runs, pass rates: “Explore” buttons could open a larger container instead of the small embedded window.

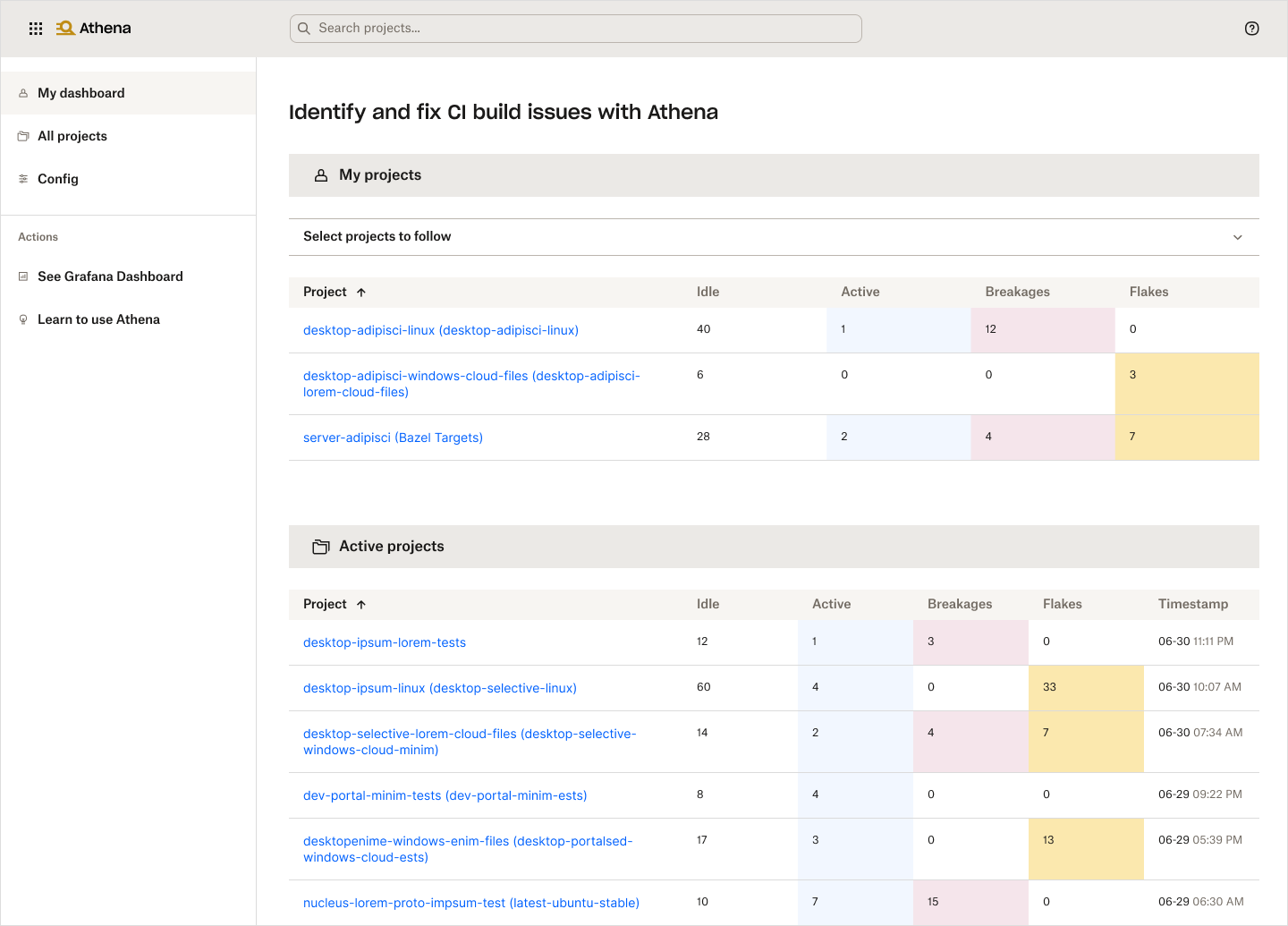

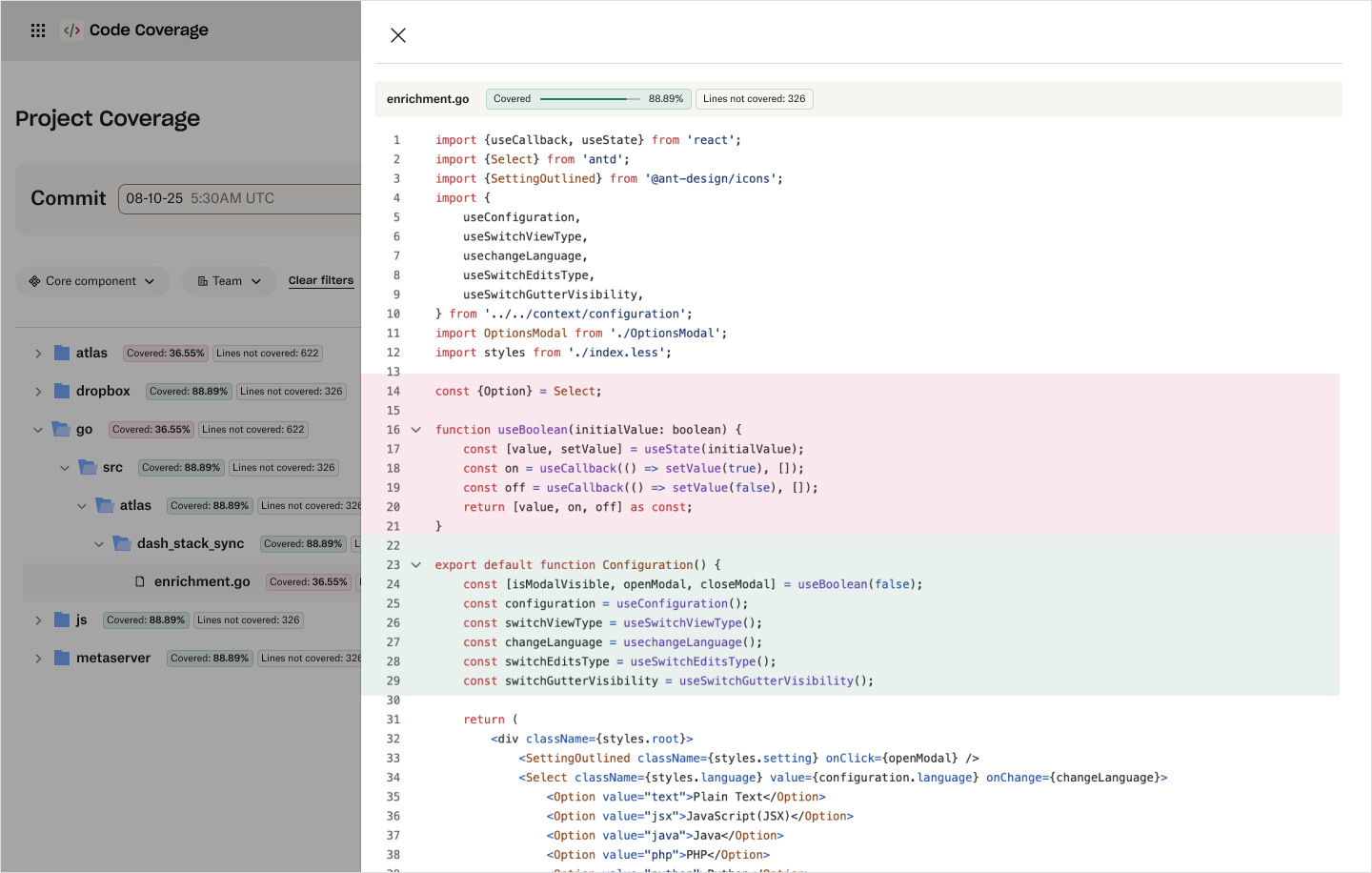

Athena and Code Coverage applications design

I went on to expand the developer platform and to connect and automate other key workflows, balancing design consistency and scalability with the unique UX each tool required. The Athena and Code Coverage applications that followed steadily increased engineer satisfaction and resulted in faster delivery, higher-quality code, and greater time efficiency.